Vibe Directing

We're not in Hollywood any more!

True revolution isn’t about incrementally improving the old way of making films;

it’s about creating a new way entirely — SNUK3M

When it comes to AI and filmmaking, many people are focused on how AI can make each phase of the existing process faster and cheaper—whether it’s automating editing, enhancing VFX, or streamlining color grading.

I think they are missing the point. AI is not incremental, it’s a paradigm shift.

With AI at the center, new creative teams will emerge—teams that will gleefully ignore the traditional Hollywood workflow. Instead, these teams will naturally collaborate in real time, shaping scenes dynamically as they go. This new approach isn’t just an evolution; it’s a fundamental transformation.

And it’s called Vibe Directing.

Here’s how Vibe Directing will work.

Vibe Directing will be a process that feels more like a conversation, or like playing a collaborative turn-based game, than following a rigid production schedule. Imagine a group of filmmakers (the director, cinematographer, editor, and sound designer) sitting around a table, each with their own workstation, all connected at the same time to a central AI-driven editing environment.

Each person in the room (e.g. director, editor, cinematographer) is looking at the same scene and interacting with a GPT that is custom built to be their AI assistant. Each assistant has rights to modify the working scene in real time as the conversation unfolds.

Like a game of Magic: The Gathering, they take turns in structured rounds, each making their creative moves, building on the previous render, and continuously refining the scene together. The AI acts as both a facilitator and a participant, offering insights, suggesting tweaks, and even making subtle changes between turns. Each round brings the scene closer to the director’s vision, blending creativity, intuition, and machine intelligence in a real-time, collaborative flow.

Magic: The Gathering (MTG) is a collectible card game where players take on the roles of powerful wizards known as Planeswalkers, using decks of cards to summon creatures, cast spells, and manipulate the game state to defeat their opponents. The game is structured in a turn-based format, where players alternate taking actions in a series of clearly defined phases. Each turn, a player draws cards, generates mana to cast spells, summons creatures, and attacks their opponent while the other player has opportunities to respond. This turn-based structure allows for strategic planning and counterplay, as each move builds on the previous one, creating a dynamic and evolving game state.
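To make the turn-based idea concrete, here is a minimal sketch of one round in Python. Everything in it is an assumption made for illustration: the `Scene` class, the `play_round` function, and the setting names are hypothetical and do not describe any existing tool. Each role takes a turn, its changes are merged into the shared scene, and the scene is re-rendered before the next turn.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Scene:
    """Hypothetical shared scene state: named settings plus a render counter."""
    settings: Dict[str, object] = field(default_factory=dict)
    history: List[Tuple[str, dict]] = field(default_factory=list)
    render_count: int = 0

    def render(self) -> None:
        # Stand-in for a real render; here it only counts passes.
        self.render_count += 1


# A "move" is any function that looks at the scene and returns a dict of changes.
Move = Callable[[Scene], dict]


def play_round(scene: Scene, moves: Dict[str, Move]) -> Scene:
    """Run one round: each role takes a turn, and the scene re-renders after each."""
    for role, move in moves.items():
        changes = move(scene)
        scene.settings.update(changes)
        scene.history.append((role, changes))
        scene.render()
    return scene


# Illustrative moves, one per seat at the table (values are made up).
moves = {
    "director": lambda s: {"line_delivery": "restrained"},
    "cinematographer": lambda s: {
        "camera_distance_m": s.settings.get("camera_distance_m", 2.0) + 0.5
    },
    "editor": lambda s: {"pause_before_reaction_s": 0.5},
    "sound_designer": lambda s: {"room_hum_level": 0.15},
}

scene = play_round(Scene(), moves)
print(scene.settings)
print(scene.render_count)
```

Calling `play_round` again on the same scene would model the next round, with each move building on the previous render.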

Setup Phase: Creating the Static Starting Point

The purpose of the setup phase in Vibe Directing is to establish a static image starting point—a rough, foundational version of the scene that includes a basic edit, dialogue, foley, and music. This initial version serves as a canvas for collaborative refinement, giving the team a concrete base to build on. The goal is not to achieve perfection but to create a draft that captures the essence of the scene, providing a shared point of reference before the iterative rounds begin.

Steps:

Load Storyboards and Initial Shot Lists: Prior to the vibe session, the director takes the script and creates storyboards and a starting shot list, perhaps with other teams or simply alone. They then preload a rough cut built from those storyboards and shot lists to create the first static scene, including basic framing and dialogue placement.

Load Mood Boards and Visual References: Bring in AI-generated mood boards and example images to establish the look and feel of the scene.

Load Pacing and Editing Examples: Include clips or reference scenes that showcase the desired pacing and rhythm. AI does an initial pass.

Load Audio Elements: Have the AI do a first pass and integrate placeholder foley, dialogue, and music to give the scene a preliminary sound profile.

Render: The first still image scene with edit points is rendered with rough sound.

Session Initialization: All team members (director, cinematographer, editor, sound designer) join the same AI-driven session concurrently. Each has a chat window with their own custom GPT for live communication, and everyone can see the current static scene displayed together.
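The setup steps above could be modeled roughly as follows. This is only a sketch under stated assumptions: `SetupAssets`, `VibeSession`, and the file names are hypothetical, invented here to show the ordering (load everything, render the static scene, then let people join).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SetupAssets:
    """Hypothetical container for the material loaded before a session."""
    storyboards: List[str] = field(default_factory=list)
    shot_list: List[str] = field(default_factory=list)
    mood_boards: List[str] = field(default_factory=list)
    pacing_references: List[str] = field(default_factory=list)
    audio_placeholders: List[str] = field(default_factory=list)

    def missing(self) -> List[str]:
        """Return the names of asset groups that are still empty."""
        return [name for name, items in vars(self).items() if not items]


@dataclass
class VibeSession:
    assets: SetupAssets
    participants: List[str] = field(default_factory=list)
    rendered: bool = False

    def render_first_pass(self) -> None:
        # Refuse to render until every asset group has at least one item.
        gaps = self.assets.missing()
        if gaps:
            raise ValueError("Cannot render; missing: " + ", ".join(gaps))
        self.rendered = True

    def join(self, role: str) -> None:
        # Team members join only once the static scene exists.
        if not self.rendered:
            raise RuntimeError("Render the static scene before opening the session")
        self.participants.append(role)


# Placeholder file names, purely illustrative.
assets = SetupAssets(
    storyboards=["sb_01.png"],
    shot_list=["wide", "close-up"],
    mood_boards=["mood_01.png"],
    pacing_references=["ref_clip.mp4"],
    audio_placeholders=["temp_score.wav"],
)
session = VibeSession(assets)
session.render_first_pass()
for role in ["director", "cinematographer", "editor", "sound designer"]:
    session.join(role)
print(session.participants)
```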

Scene One: Establishing the Vibe

The purpose of the initial vibe session is to capture the essence of the characters and the scene without worrying about the final polished look. This session is all about defining who the characters are, how they sound, how they move, and how they interact within the space. The Feel. The goal is to establish the emotional core and the dynamic between characters while ensuring the scene’s vibe feels authentic. The visual style in this session remains a slightly higher-fidelity pencil drawing—clear enough to convey character expression and motion, but still sketch-like to allow for flexible iteration.

Golden Rules of the First Vibe Session:

It’s a conversation - Imagine each person in the room can freely talk to their individual GPT and each GPT is connected to the scene and the shots. Each person can have a conversation that impacts the scene - all simultaneously. Each new decision is then rendered immediately.

It’s an Iterative Process - Everyone knows the first iteration is not expected to be great. The goal is not to perfect the scene in one go but to get a rough, foundational pass in place.

Make it Slightly Better Each Round - Each round should focus on making the scene just a bit better than before. No big leaps or final touches—just gradual, small improvements.

Speed Over Perfection - Keep the rounds quick and fluid. The goal is to maintain momentum and avoid overthinking any single change.

Collaborative, Not Competitive - Each team member makes adjustments in parallel, contributing to a collective effort rather than competing to “fix” the scene.

Keep the Vibe as the North Star - Every adjustment should align with the intended vibe. Whether it’s a tweak to the character’s tone or the movement of the camera, it should always serve the scene’s core emotion.

Embrace the Roughness - Early rounds will feel raw and incomplete—that’s expected. The purpose is to uncover what works and what doesn’t without worrying about polish.

The Goal of the First Vibe Directing Session

The first Vibe Directing session is crucial because it establishes the foundational tone and character dynamics that will guide the entire film. This session is all about setting the emotional core of the story, defining the primary characters, and capturing the raw essence of the scene. By focusing on the fundamental elements—like how characters look, sound, and interact—the first session creates a consistent framework that future sessions can build upon. Once this groundwork is laid, every subsequent session benefits from a clear vision and cohesive starting point, allowing for more nuanced adjustments and refinements.

Establishing Character Vibe - Define how each character should look, move, and sound, creating a consistent presence that informs their portrayal throughout the film.

Setting the Emotional Tone - Capture the underlying mood of the scene, whether it’s tense, intimate, or confrontational, to ensure every future adjustment aligns with the intended feeling.

Defining the Visual Language - Choose a baseline aesthetic that will be consistently referenced, from lighting and camera movement to color grading and composition.

Building the Character Dynamics - Explore how characters interact, from body language to vocal delivery, establishing a rhythm that later scenes will naturally follow.

Creating a Baseline for Iteration - Produce a rough but intentional version that acts as a blueprint for future improvements, allowing the team to progressively refine while maintaining the original vision.

Laying the Groundwork for Consistency - Once the core vibe is established, any changes made later can propagate through the project without disrupting the foundational tone.

Vibe Directing in Action:

Team Analysis: The team reviews the initial static shot edit of the scene, discussing how the characters look, sound, and interact. They focus on whether the blocking makes sense, whether the camera movement complements the performance, and if the overall feel matches the director’s vision.

Director’s Adjustments - Defining Character Motivation and Performance:

Director: "Alright, here’s what I’m thinking. Your character just got hit with some heavy news, but he’s not the kind of guy to break down. He’s holding it together, but there’s that tension—like he’s keeping it all just beneath the surface. Kind of like when Denzel Washington’s character in Fences is trying to stay composed after a confrontation—you can see it all boiling underneath, but he’s keeping himself in check. You know what I mean?"

AI Assistant: "Understood. So, more controlled emotion, but enough to hint that he’s struggling to keep it in. Should I add a bit of hesitation in his voice to show that internal conflict?"

Director: "Yeah, just a touch. He’s the kind of guy who doesn’t want to let anyone see him crack. Maybe his voice wavers for just a moment before he pulls it back."

AI Assistant: "Got it. I’ll add a slight pause in the middle of the line, just enough to catch that hesitation."

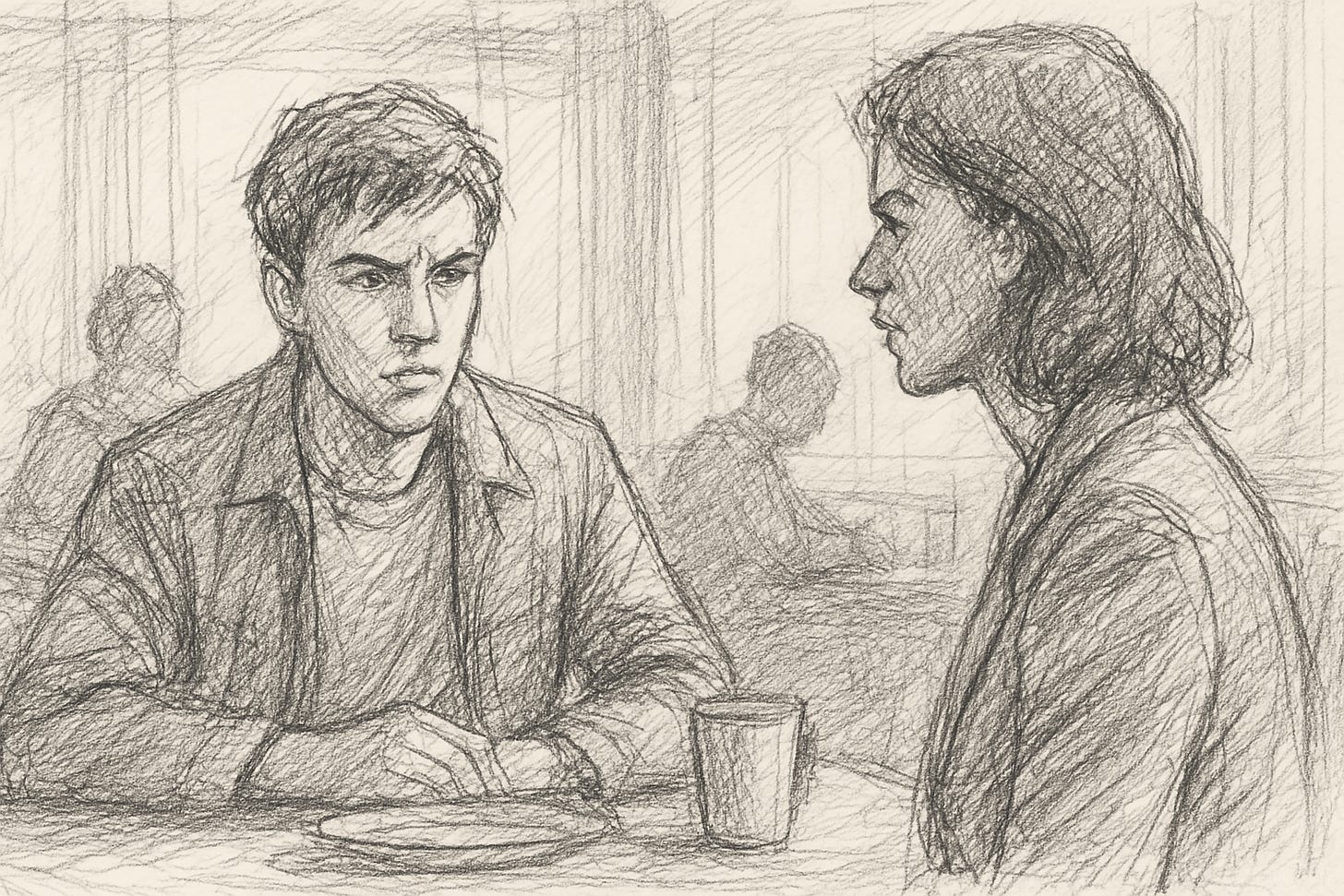

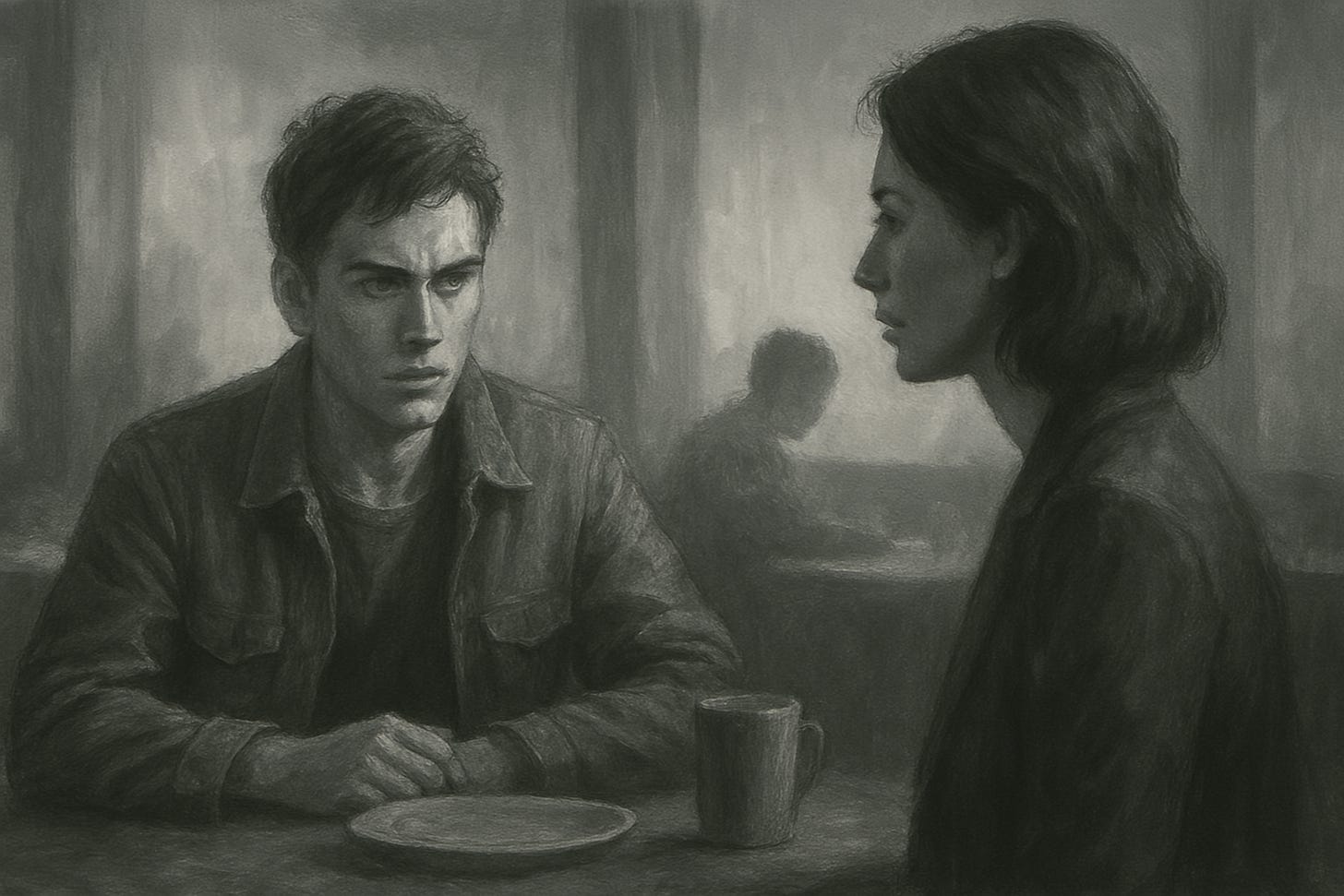

Director: "Exactly. Now, for his look, I’m picturing a blend of the youthful, thoughtful features of Timothée Chalamet with the more rugged, classic charm of Logan Lerman."

AI Assistant: "Like this?"

Director: "Yes, that’s it. Also, his clothing should look practical, maybe a bit worn, but not sloppy—like he’s been through a lot but still holds himself together."

AI Assistant: "Applying a layered look—durable jacket, slightly faded, but well-fitted. Adding subtle texture to hint at wear without looking disheveled."

Director: "Perfect. And physically, I want him to stay composed. Maybe his shoulders tense up a bit, like he’s bracing himself. He’s not completely calm, but he’s forcing himself to stay steady."

AI Assistant: "Adjusting his posture to be more upright but with a bit of tension in the shoulders. Should I keep his eye contact steady, or let it falter briefly?"

Director: "Keep it steady. He doesn’t want to show any weakness, but his eyes should still look like they’re holding back a storm."

AI Assistant: "Understood. Enhancing the focus in his gaze but softening the edges to give that sense of restraint."

Director: "Good. And when he finally says the line, I want it almost like he’s saying it more to himself than to the other person—like he’s convincing himself to keep going."

AI Assistant: "Lowering the volume slightly on that line, giving it a more introspective tone. Should I add a small breath at the end to signify a moment of release?"

Director: "Yeah, that’s perfect. Let’s see how that feels."

AI Assistant: "Shall I imprint this in the Character Bibles?"

Director: "OK, yeah, print."

Cinematographer’s Adjustments - Enhancing Spatial Clarity:

Cinematographer: "I’m thinking the shot feels too flat. Can we pull back slightly to add some space between the characters and their surroundings?"

AI Assistant: "Understood. Adjusting the camera distance by half a meter. Would you like a slight tilt to follow the protagonist’s movement?"

Cinematographer: "Yes, just a subtle upward tilt as he stands up."

AI Assistant: "Rendering... Here’s the updated movement."

Cinematographer: "That’s more dynamic. It follows the action naturally."

Editor’s Adjustments - Improving Dialogue Pacing:

Editor: "The dialogue pacing feels rushed. Can we add a tiny pause between the protagonist’s line and the antagonist’s reaction?"

AI Assistant: "Sure, inserting a 0.5-second pause between lines. Do you want me to elongate the reaction shot as well?"

Editor: "Yes, just by a few frames to give it more weight."

AI Assistant: "Done. Playing back the updated cut now."

Editor: "That’s better. The reaction feels more deliberate."

Sound Designer’s Adjustments - Adding Ambient Depth:

Sound Designer: "The dialogue sounds too isolated. Can we bring in a subtle background hum, like an AC unit, to fill the space?"

AI Assistant: "Adding ambient room noise with a low hum. Volume set at 15%. How does that sound?"

Sound Designer: "That works. Now, can we make the protagonist’s footsteps a bit sharper when he moves to the window?"

AI Assistant: "Increasing the clarity of the footstep foley and adding a slight reverb to match the room size."

Sound Designer: "Much better. It makes his movement feel more purposeful."

AI Suggestions - Subtle Enhancements:

AI Analysis: "I noticed that the supporting music is occupying the same frequency range as the male actor’s voice, making him harder to hear. Shall I apply dynamic ducking and set a wider mix for the music, prioritizing the dialogue in the center channel?"

Sound Designer: "Yeah, that sounds like it would help. Go ahead and do it."

AI Assistant: "Applied. The music now dips slightly during dialogue without losing presence."

Sound Designer: "That’s cleaner. Nice catch."
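For readers unfamiliar with ducking, the idea in the exchange above can be sketched in a few lines of Python. This is a deliberately simplified illustration, not how a production mixer works: the `duck_music` function, its threshold, and its reduction factor are assumptions, and real ducking would smooth the gain change with attack and release times rather than switching per sample.

```python
def duck_music(music, dialogue, threshold=0.1, reduction=0.5):
    """Scale music samples down wherever the dialogue level exceeds the threshold."""
    if len(music) != len(dialogue):
        raise ValueError("music and dialogue must be the same length")
    return [
        m * reduction if abs(d) > threshold else m
        for m, d in zip(music, dialogue)
    ]


# Toy levels: dialogue is present only in the middle two samples.
music = [0.8, 0.8, 0.8, 0.8]
dialogue = [0.0, 0.6, 0.7, 0.0]
ducked = duck_music(music, dialogue)
print(ducked)  # [0.8, 0.4, 0.4, 0.8]
```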

AI Analysis: "I noticed that some foley elements, like footsteps and fabric movement, are not accurately synced with the character’s actions. Shall I remap the foley to align better with the character’s motion?"

Sound Designer: "Yes, that’s been bothering me too. Please do."

AI Assistant: "Remapping and adjusting the timing... Done. The footsteps now match the pacing of the movement, and fabric rustle is in sync."

Sound Designer: "Much better. It feels more natural now."

AI Analysis: "In reviewing the mood board, I noticed several images featured a subtle rim light on the character’s silhouette, creating a more defined outline. Would you like me to apply a similar lighting effect in this shot?"

Cinematographer: "Yeah, that would give him more presence. Go for it."

AI Assistant: "Adding a soft, subtle rim light to separate the character from the background. Adjusted for intensity to avoid harshness."

Cinematographer: "Nice, that adds just the right amount of pop."

AI Analysis: "The color grading currently leans toward a cooler tone, which might feel detached given the emotional nature of the scene. Would you prefer a slightly warmer grade to enhance the intimate atmosphere?"

Director: "Good call. Let’s warm it up, but not too much—just enough to feel more personal."

AI Assistant: "Shifting the color balance slightly toward warmth. How does this feel?"

Director: "That’s better—more inviting, but still serious."

Subsequent Rounds:

Each round builds on the last, focusing on fine-tuning character dynamics and performance. The team revisits character interactions, adjusts shot compositions, and makes slight modifications to vocal tone and sound balance. The AI continues to propose subtle improvements to enhance naturalism and coherence.

The goal of this first session is to ensure the characters and scene feel alive and consistent, setting the foundation for more detailed work in later sessions.

This first session also serves as data that helps the AI guide later sessions on new scenes faster: elements such as lighting and character design are held constant once they are established.

The team iterates as many times as needed until they all feel the vibe is right. Once consensus is reached, they lock the character dynamics and move on to the next session.

Scene Two and Beyond

After completing Scene 1, a similar collaborative process is applied to each subsequent scene. The team continues to iterate through rounds, refining characters, visuals, pacing, and sound. However, as the scenes progress, the AI becomes increasingly intelligent about the established look, feel, and character dynamics. The AI actively learns from the adjustments made in earlier scenes, building a style map that ensures consistency across the entire project. This adaptive approach allows changes made to one scene to automatically inform and update related scenes, maintaining coherence throughout the film.

How the AI Learns and Propagates Changes:

Scene Continuity - The AI maps lighting, color grading, and camera movement from Scene 1, recognizing it as the baseline style for the entire project. As new scenes are developed, the AI references previous decisions to maintain visual and tonal consistency.

If the lighting for the protagonist’s close-up is adjusted to be more dramatic in Scene 1, the AI will automatically replicate this lighting style in similar shots throughout the film.

Character Consistency - The AI continuously updates its model of each character’s appearance, demeanor, and voice. If the director decides to slightly alter the protagonist’s look or voice in Scene 3, the AI will prompt the team to update earlier scenes accordingly.

If the director wants the protagonist’s hair to appear more disheveled in a later scene to reflect exhaustion, the AI can propagate this change across previous scenes, ensuring a consistent visual evolution.

Cinematography Adaptation - The AI builds a visual profile for each location, including lighting setups, camera angles, and shot compositions. If a decision is made to change the way a location is shot—for instance, adding a handheld camera feel to heighten tension—the AI updates all related scenes to maintain that cinematic language.

If the director decides that all scenes in the underground bunker should have a dim, flickering light, the AI will automatically apply this adjustment to every bunker scene throughout the film.

Sound and Foley Mapping - The AI tracks ambient sound profiles, character audio levels, and foley effects used in each scene. When sound adjustments are made—like changing the reverb to make dialogue clearer—the AI ensures that similar settings are applied throughout related scenes.

If the decision is made to enhance the echo effect in the warehouse scenes for a more cavernous feel, the AI will automatically implement this across every scene in that location.

Pacing and Editing Flow - The AI learns the rhythm established in earlier scenes, maintaining a consistent editing pace. Changes to the pacing or cut style are automatically carried over to maintain storytelling coherence.

If the director decides to slow down the reaction shots for a more dramatic effect, the AI applies this timing adjustment wherever similar reactions occur in later scenes.

Instant Propagation - The AI ensures that once a change is approved in one scene, it is instantly applied to every other instance where it logically fits. The team is prompted to review these propagated changes to ensure they align with the evolving vision.

If the team decides to introduce a specific color grade for flashback scenes, the AI will update every flashback across the film to reflect this unified visual approach.
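The propagation behavior described above might look something like this in Python. It is a minimal sketch under assumptions: `SceneRecord`, `propagate`, and the tag-based matching are hypothetical stand-ins for whatever "logically fits" would mean in a real system. An approved change is applied to every scene that carries a matching tag, and each touched scene is flagged so the team can review it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class SceneRecord:
    """Hypothetical per-scene record: tags describe location or scene type."""
    name: str
    tags: Set[str]
    settings: Dict[str, str] = field(default_factory=dict)
    needs_review: bool = False


def propagate(scenes: List[SceneRecord], tag: str, key: str, value: str) -> List[str]:
    """Apply an approved setting to every scene carrying the tag; flag each for review."""
    touched = []
    for scene in scenes:
        if tag in scene.tags and scene.settings.get(key) != value:
            scene.settings[key] = value
            scene.needs_review = True
            touched.append(scene.name)
    return touched


film = [
    SceneRecord("scene_1", {"apartment"}),
    SceneRecord("scene_4", {"bunker"}),
    SceneRecord("scene_7", {"bunker", "flashback"}),
]

# The director approves dim, flickering light for the bunker.
changed = propagate(film, tag="bunker", key="lighting", value="dim, flickering")
print(changed)  # ['scene_4', 'scene_7']
```

Flagging scenes for review rather than silently overwriting them mirrors the step where the team is prompted to check propagated changes.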

By leveraging this adaptive, evolving process, Vibe Directing not only ensures creative continuity but also significantly reduces the time spent on repetitive adjustments. As the film progresses, the AI becomes a more intuitive and indispensable part of the creative team, allowing the filmmakers to focus on storytelling while the AI maintains stylistic integrity.

The Paradigm Shift of Vibe Directing

Vibe Directing revolutionizes the filmmaking process by allowing a small, agile crew of just five (four filmmakers and an AI assistant) to collaboratively build a film in iterative steps. Unlike traditional production methods, which often involve large teams working in segmented phases, Vibe Directing enables real-time creativity and continuous refinement. This approach not only accelerates production but also allows for audience feedback to be seamlessly integrated, with changes propagating instantly across all scenes. As a result, films can be shaped dynamically, maintaining consistency while staying responsive to creative insights and audience reactions.

Small, Agile Team - A compact crew of five (director, cinematographer, editor, sound designer, AI assistant) can collaboratively build a film in real time, eliminating the need for large, segmented teams and lengthy post-production.

Iterative Creation - Scenes are crafted in rounds, with each iteration improving slightly on the last. This method keeps the creative energy flowing, reducing the pressure of perfection on the first pass.

Real-Time Collaboration - All team members work concurrently, sharing insights and making adjustments in the same session, which fosters creativity and quick problem-solving.

Immediate Propagation - Once changes are approved, the AI propagates them instantly across all relevant scenes, ensuring consistency without manually updating every shot.

Audience Testing and Feedback - Each version of a scene can be tested with audiences, gathering real-world reactions before finalizing. Adjustments based on feedback are quickly implemented and immediately consistent across the entire film.

Adaptive AI Assistance - The AI assistant not only helps make changes faster but also learns the creative vision as it evolves, providing smarter, context-aware suggestions.

Enhanced Consistency - The AI tracks visual styles, character continuity, and pacing throughout the project, automatically maintaining cohesion as the film develops.

Faster Turnaround - The iterative, concurrent nature of Vibe Directing significantly reduces production time while maintaining high-quality output, making it ideal for independent filmmakers and rapid content creation.

Flexible and Responsive - Whether it’s altering a character’s look, adjusting a scene’s mood, or fine-tuning the pacing, every change can be applied across the film without disrupting continuity or creative intent.

So… When?

The concept of Vibe Directing might seem futuristic, but we are closer than many think. With the recent upgrade to ChatGPT that introduced inline conversational image editing, and the debut of ReveAI, a tool enabling real-time, conversational adjustments to images, we are seeing the early building blocks of a fully multimodal, interactive video editing environment. Given that AI capabilities tend to leap forward approximately every 8 months, the progression from image-based interactions to full video editing is likely just two more development cycles away. That means we could realistically see a conversational video editor within the next 16 months, well under two years from now. As AI continues to evolve at this rapid pace, Vibe Directing could become a practical reality sooner than anticipated, fundamentally transforming the filmmaking process.